|

| Other side of the moon |

What is a Clustered Key?

“Clustered indexes sort and store the data rows in the table based on their key values. There can only be one clustered index per table, because the data rows themselves can only be sorted in one order”. To improve performance, reducing the IO overhead is necessary to have a clustered key on almost all tables in a high transactional database. Although there are numerous guidelines and good practices that are available to understand the necessity of an appropriate clustered in a table; the question is how often do we consider those practices while choosing a clustered key?

Pitfall of in-efficient clustered key:

1. Fragmentation: Rapidly introduces more fragmentation.

2. Page Split: A huge number of page allocations and de-allocations happen.

3. Space:Requires more disk & memory, and IO cost will be high.

4. CPU Usage: Observe high CPU due to excessive page split.

5. Slowness: Query response time decreases.

6. Optimization: Index optimization requires more time.

Good Clustered Key:

1. A unique key column is the best candidate for a clustered key.

2. IDENTITY column is a good choice as they are sequential.

3. The column which is used on a JOIN clause.

4. The column used to retrieve data sequentially.

5. The column used in SORT (GROUP or ORDER) operation frequently.

6. Frequently used in range scan (such as BETWEEN, >=, =< )

7. Static Column: such as EmployeeID, SSN.

In-efficient choice for clustered key:

1. Wide Keys: multi-columns key. Such as LastName + FirstName + MiddleName or Address1 + Adddress2 + Phone, so on. “The key values from the clustered index are used by all non-clustered indexes as lookup keys. Any non-clustered indexes defined on the same table will be significantly larger because the non-clustered index entries contain the clustering key and also the key columns defined for that non-clustered index.”

2. GUID:Randomly generated unique values leads to highest possible fragmentation. NEWSEQUENTIALID() can be used instead of NEWID() to create GUID to reduce fragmentation in a table.

3. Data Changes: The column which has frequent value change is not a good choice for a clustered key.

Narrow vs. Wide Clustered Key Test:

Here we will be observing how a wide clustered key introduces performance issues. In our example,

(a) “xID” is the Clustered Key which is a Primary Key and an Identity column.

(b) Later we will create a multi-column clustered key by using “sName1”, “sName2” and “sName3” which are varchar columns.

(c) We will insert 100,000 rows for this test

(d) We will review fragmentation and page split for type of indexes.

DMV Query:

--To check table and index level changes:

SELECT OBJECT_NAME(ios.object_id,ios.database_id)astable_name,

ios.index_id,

si.nameASindex_name,

ios.leaf_insert_count+

ios.leaf_update_count+

ios.leaf_delete_countASleaf_changes,

ios.leaf_allocation_countASleaf_page_splits,

ios.nonleaf_insert_count+

ios.nonleaf_update_count+

ios.nonleaf_delete_countASnonleaf_changes,

ios.nonleaf_allocation_countASnonleaf_page_splits,

(ios.range_scan_count+ios.leaf_insert_count

+ios.leaf_delete_count+ios.leaf_update_count

+ios.leaf_page_merge_count+ios.singleton_lookup_count

)total_changes

FROM sys.dm_db_index_operational_stats(DB_ID(),NULL,NULL,NULL)ios

JOINsys.objectssoONso.object_id=ios.object_id

JOINsys.indexessiONsi.object_id=ios.object_id

ANDsi.index_id=ios.index_id

JOINsys.schemasssONso.schema_id=ss.schema_id

WHERE OBJECTPROPERTY(ios.object_id,'IsUserTable')= 1

ORDERBYleaf_changesDESC

--To check index fragmentation:

SELECT a.index_id,

b.nameAS[object_name],

CONVERT(NUMERIC(5,2),a.avg_fragmentation_in_percent)pct_avg_fragmentation

FROM sys.dm_db_index_physical_stats(DB_ID(),NULL,NULL,NULL,NULL)ASa

JOINsys.indexesASbONa.object_id=b.object_id

ANDa.index_id=b.index_id;

Script to test:

CREATEDATABASETestDB

GO

USE[TestDB]

GO

CREATETABLE[tblLarge](

[xID][int]IDENTITY(1,1)NOTNULL,

[sName1][varchar](10)DEFAULT'ABC'NOTNULL,

[sName2][varchar](13)DEFAULT'ABC'NOTNULL,

[sName3][varchar](36)DEFAULT'ABC'NOTNULL,

[sIdentifier][char](2)NULL,

[dDOB][date]NULL,

[nWage][numeric](12, 2)NULL,

[sLicense][char](7)NULL,

[bGender][bit]NULL

)ON[PRIMARY]

GO

-- Clustered key on xID

ALTERTABLEtblLargeADDCONSTRAINTPK_tblLarge

PRIMARYKEYCLUSTERED (xID)WITH (FILLFACTOR=90)

GO

-- DROP constraint

ALTERTABLE[dbo].[tblLarge]DROPCONSTRAINT[PK_tblLarge]

GO

-- Multi-column clustered key

ALTERTABLEtblLargeADDCONSTRAINTPK_tblLarge

PRIMARYKEYCLUSTERED (sName1,sName2,sName3)WITH (FILLFACTOR=90)

GO

-- Insert 100,000 records

INSERT INTOtblLarge

(sName1,

sName2,

sName3,

sIdentifier,

dDOB,

nWage,

sLicense,

bGender

)

VALUES (LEFT(CAST(NEWID()ASVARCHAR(36)), 8),

LEFT(CAST(NEWID()ASVARCHAR(36)), 13),

CAST(NEWID()ASVARCHAR(36)),

LEFT(CAST(NEWID()ASVARCHAR(36)), 2),

DATEADD(dd,-RAND()* 20000,GETDATE()),

(RAND()* 1000 ),

SUBSTRING(CAST(NEWID()ASVARCHAR(36)), 6, 7),

COALESCE(ISNUMERIC(LEFT(CAST(NEWID()ASVARCHAR(36)),1)),0))

GO 100000

Fragmentation Comparison:

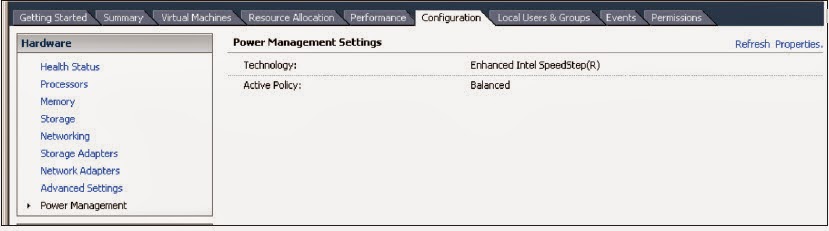

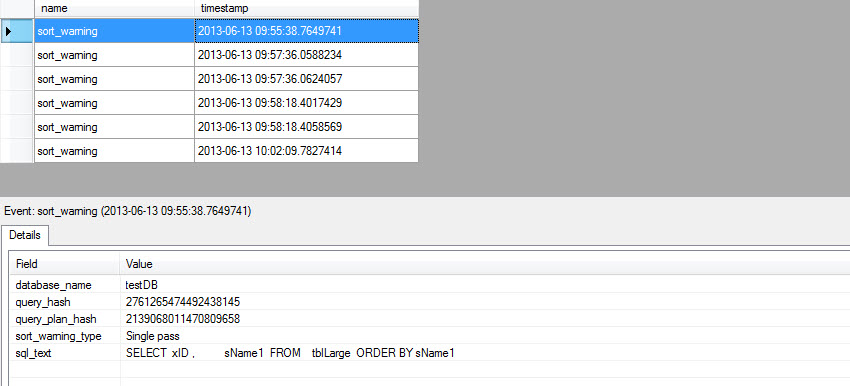

As you can see from the following picture given below that the fragmentation and page split has been increased dramatically when wide key has been used.

Figure#1: Narrow clustered key

Figure#2: Multi-column clustered key

Conclusion:

While using wide key or multi-columns for clustered index is supported by SQL Server, but we should not overlook the dangerous performance consequences that occurs silently.

Clustered Index Design Guidelines