Data compression is one of the most appreciated features of SQL Server. However, many of us overlook the benefits of data compression in terms of storage and query performance. If applied correctly, we could have save up to 30% disk space and speed up the query by at least 10 times. Although, there will be a penalty of 2% to 5% of increased CPU usage while compressing or decompressing pages, the gain is far more significant than adding some negligible processor usage.

Data Compression Setting:

Data compression is a feature of Enterprise Edition only and there is no any specific or particular server or database wide setting that can be turned on to implement the feature either server wide or on a per database basis. I think that the folks at Microsoft should consider implementing database compression feature settings at a database level so that we can use this feature more effectively.

What to compress?

Data compression needs to be applied individually on a per table or index basis and it can be applied to a heap table, clustered index, non-clustered index, indexed view, columnstore table and an index. For example, a clustered index table might have four non-clustered indexes and one nonclustered columnstore index. Thus you can have “PAGE” level compression on the clustered index, “ROW” level compression on the nonclustered indexes, “PAGE” level compression on the nonclustered indexes and “COLUMNSTORE_ARCHIVE” on a nonclustered columnstore index.

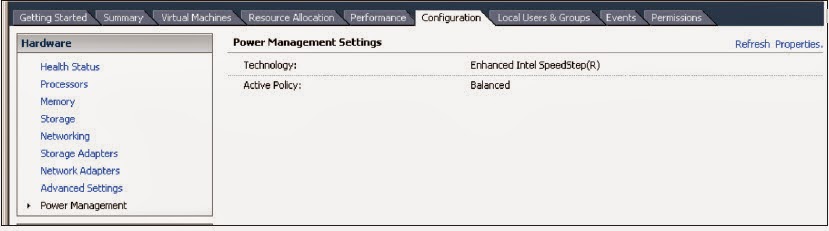

Figure#1: Different data compression settings on a table:

Some Good Points from MSDN:

1. Enabling compression can cause query plans to change because the data is stored using a different number of pages and number of rows per page.

2. Compression does not affect backup and restore.

3. Compression does not affect log shipping.

4. Compression has some affect and consideration on replication.

5. Data compression is incompatible with sparse columns.

6. When data is exported, even in native format, the data is output in the uncompressed row format.

7. When data is imported, if the target table has been enabled for compression, the data is converted by the storage engine into compressed row format.

8. When a clustered index is dropped, the corresponding heap partitions retain their data compression setting unless the partitioning scheme is modified. If the partitioning scheme is changed, all partitions are rebuilt to an uncompressed state.

9. Columnstore tables and indexes are always stored with columnstore compression. To reduce the size of columnstore data further, configuring an additional compression called archival compression can be used. It may slow down the query execution and introduce high resource utilization.

10. Compression is not available for system tables.

11. A clustered index or Heap table can have different types of data compression levels.

12. Regular index settings/options can be used with clustered or nonclustered indexes.

13. Clustered or nonclustered columnstore indexes have fewer index settings.

Script to check data compression level:

Following is a handy script to check what has already been compressed and what has not:

/************************************************************

* Check data compression level

************************************************************/

SELECT SCHEMA_NAME(o.schema_id)+'.'+OBJECT_NAME(o.object_id)AS table_name,

o.type_desc,

i.name ASindex_name,

p.data_compression_desc AScompression_type,

i.type_desc ASstorage_type

FROM sys.partitions p

INNERJOINsys.objects o

ON p.object_id= o.object_id

JOINsys.indexes i

ON p.object_id= i.object_id

ANDi.index_id = p.index_id

-- WHERE p.data_compression> 0

-- ANDOBJECT_NAME(o.object_id)='Address1'

You can also get the similar information by using my free tool.

Figure#2: Different data compression settings on a table:

Some T-SQL Script to compress data in a database:

(a) Compress all HEAP in a database:

/************************************************************

* Data compression on HEAP

************************************************************/

SELECT'ALTER TABLE ['+SCHEMA_NAME(o.schema_id)+'].['+ o.[name]

+'] REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = PAGE)'

FROM sys.objects o

JOINsys.indexes i

ON o.[object_id] = i.[object_id]

WHERE o.[type_desc] ='USER_TABLE'

AND i.type_desc IN('HEAP')

(b) Compress all CLUSTERED index tables:

/************************************************************

* Data compression on a CLUSTERED index table and underlying indexes

************************************************************/

SELECT'ALTER INDEX ALL ON ['+SCHEMA_NAME(o.schema_id)+'].['+ o.[name]

+'] REBUILD WITH (DATA_COMPRESSION = PAGE)'

FROM sys.objects o

JOINsys.indexes i

ON o.[object_id] = i.[object_id]

WHERE o.[type_desc] ='USER_TABLE'

AND i.type_desc IN('CLUSTERED')

(c) Compress all NONCLUSTERED indexes:

/************************************************************

* Data compression on nonClustered index

************************************************************/

SELECT'ALTER INDEX ['+ i.[name] +']'+' ON ['+SCHEMA_NAME(o.schema_id)+'].['+ o.[name]

+'] REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = PAGE)'

FROM sys.objects o

JOINsys.indexes i

ON o.[object_id] = i.[object_id]

WHERE o.[type_desc] ='USER_TABLE'

AND i.type_desc IN('NONCLUSTERED')

(d) Compress all CLUSTERED COLUMNSTORE tables:

/************************************************************

* Data compression on Clustered columnstore index

************************************************************/

SELECT'ALTER TABLE ['+SCHEMA_NAME(o.schema_id)+'].['+ o.[name]

+'] REBUILD PARTITION = ALL WITH (MAXDOP = 16, DATA_COMPRESSION = COLUMNSTORE_ARCHIVE)'

FROM sys.objects o

JOINsys.indexes i

ON o.[object_id] = i.[object_id]

WHERE o.[type_desc] ='USER_TABLE'

AND i.type_desc IN('CLUSTERED COLUMNSTORE')

(e) Compress all NONCLUSTERED COLUMNSTORE indexes:

/************************************************************

* Data compression on nonClustered columnstore index

************************************************************/

SELECT'ALTER INDEX ['+ i.[name] +']'+' ON ['+SCHEMA_NAME(o.schema_id)+'].['+ o.[name]

+'] REBUILD PARTITION = ALL WITH (MAXDOP = 16, DATA_COMPRESSION = COLUMNSTORE_ARCHIVE)'

FROM sys.objects o

JOINsys.indexes i

ON o.[object_id] = i.[object_id]

WHERE o.[type_desc] ='USER_TABLE'

AND i.type_desc IN('NONCLUSTERED COLUMNSTORE')

(f) Data compression with different index settings:

/****************************************************************

* Data compression on all nonclustered indexes

*****************************************************************/

DECLARE @table_name ASVARCHAR(256)

DECLARE @index_name ASVARCHAR(256)

DECLARE @schema_name ASVARCHAR(256)

DECLARE @type_desc ASVARCHAR(50)

DECLARE CurCompress CURSOR

FOR

SELECTSCHEMA_NAME(o.[schema_id]) AS[schema_name],

o.[name] AS[table_name],

i.[name] AS[index_name],

i.[type_desc]

FROM sys.objects o

JOINsys.indexes i

ON o.[object_id] = i.[object_id]

WHERE o.[type_desc] ='USER_TABLE'

ORDERBY

o.[name]

OPEN CurCompress

FETCHNEXTFROM CurCompress INTO@schema_name, @table_name, @index_name,@type_desc

WHILE@@fetch_status= 0

BEGIN

IF@type_desc ='NONCLUSTERED'

BEGIN

PRINT'/****************************************************************************************'

PRINT'* Data compression for the table: '+ @table_name

PRINT'****************************************************************************************/'

PRINT'ALTER INDEX ['+@index_name +'] ON ['+ @schema_name +'].['+@table_name +'] '

PRINT'REBUILD WITH ('

PRINT' FILLFACTOR = 95,'

PRINT' SORT_IN_TEMPDB = OFF,'

PRINT' STATISTICS_NORECOMPUTE = OFF,'

PRINT' ONLINE = OFF,'

PRINT' ALLOW_ROW_LOCKS = ON,'

PRINT' ALLOW_PAGE_LOCKS = ON,'

PRINT' MAXDOP = 16,'

PRINT' DATA_COMPRESSION = PAGE'

PRINT')'

PRINT'GO'

PRINT'PRINT ''Compression completed on - '+ @table_name +''''

PRINT''

END

IF@type_desc ='NONCLUSTERED COLUMNSTORE'

BEGIN

PRINT'/****************************************************************************************'

PRINT'* Data compression for the table: '+ @table_name

PRINT'****************************************************************************************/'

PRINT'ALTER INDEX ['+@index_name +'] ON ['+ @schema_name +'].['+@table_name +'] '

PRINT'REBUILD WITH ('

PRINT' MAXDOP = 16,'

PRINT' DATA_COMPRESSION = COLUMNSTORE_ARCHIVE'

PRINT')'

PRINT'GO'

PRINT'PRINT ''Compression completed on - '+ @table_name +''''

PRINT''

END

FETCHNEXTFROM CurCompress INTO @schema_name,@table_name, @index_name, @type_desc

END

CLOSE CurCompress

DEALLOCATE CurCompress

References:

Data Compression

Data Compression: Strategy, Capacity Planning and Best Practices

ALTER INDEX (Transact-SQL)

SQL Server Data Compression – Beauty or Beast?